Grover Cleveland converting the U.S.C. to machine-readable xml markup by hand, 1886.

DRAFT COPY: NOT FOR DISTRIBUTION OR CIRCULATION

Most analyses of Congress ask whether a bill passed. Congress itself does not process bills as indivisible objects. It processes text—amending, stripping, substituting, merging, splitting, and rewriting language at every stage. A bill can fail while its language succeeds, absorbed into an omnibus package or a reconciliation vehicle that bears no resemblance to the original measure. A bill can pass while most of its original text has been removed and replaced. The bill is the unit Congress tracks. The text is the unit Congress actually processes.

A substantial literature has studied legislative outcomes using bill-level data, text similarity, and institutional coding. Scholars have measured bill survival (Yano, Smith, and Wilkerson 2012), identified legislative hitchhikers (Casas, Denny, and Wilkerson 2020), analyzed omnibus strategy and vehicle substitution (Krutz 2001; Sinclair 2016), and tested negative agenda control through scheduling and roll-call exposure (Cox and McCubbins 2005). These contributions are foundational. What they share is a unit of analysis—the bill or the document—and a measurement approach that operates above the level at which Congress actually filters statutory text.

This project constructs what that literature has lacked: an integrated, deterministic, provision-level reconstruction of congressional procedure and text mutation, grounded directly in authoritative XML—the official, structured, machine-readable records published by the Government Publishing Office and the Library of Congress. It formalizes procedure as a finite-state machine with explicit gates. It tracks every atomic unit of legislative text across every version of every bill. It records how text is amended, where it appears, where it disappears, and at which institutional checkpoint it is removed. It classifies the vehicles through which policy travels. And it links all of these layers together at the provision level, so that any paragraph of statutory text can be traced through its full procedural and textual history.

The result is a database that represents Congress not as a collection of bill outcomes but as a structural system—a machine with observable moving parts. The procedural path a bill travels, the gates it encounters, the amendments that modify its text, the vehicles that absorb its provisions, the conference negotiations that rewrite its language—all of these become queryable, measurable, and comparable across Congresses.

With the addition of text embeddings—mathematical representations that capture the meaning of provisions rather than just their wording—the system gains a new dimension. It no longer sees only where text sits and how it moved. It can see when two provisions are attempting the same policy idea, even if they share no language, no sponsor, and no procedural path. Structure becomes measurable. Meaning becomes measurable. Together, they allow the institutional record to inform not just what happened, but what is likely to happen next.

This paper describes the architecture: what the system contains, how its layers integrate, what research it enables, and how it reframes legislative power at a resolution that bill-level data cannot capture.

At its most basic, this project produces a deterministic reconstruction of congressional procedure and text mutation from authoritative XML.

Deterministic means the output is rule-based, reproducible, and identical across runs. There is no inference layer.

Congressional procedure means the formal steps a bill must pass through to become law—committee referral, markup, floor consideration, conference negotiation, enrollment, and presidential signature. These steps are not metaphors. They are a defined sequence of institutional gates, each controlled by different actors with different authorities.

Text mutation means tracking how the actual words of legislation change as they move through the process. Bills are not static documents. They are living texts that are amended, substituted, stripped, and rebuilt at every stage.


Authoritative XML means the data comes from official government sources—specifically, GovInfo Bill Status XML (which records what happened procedurally to every bill) and GPO Legislative XML (which records the actual text of every version of every bill). These are the canonical records of congressional activity, published by the Government Publishing Office and Library of Congress. XML is a structured format in which every section, paragraph, and amendment operation is explicitly marked, allowing the system to preserve hierarchy and detect change without guesswork.

This system reconstructs what is observable from the official record. It does not capture private negotiations, informal leadership agreements, lobbying influence, or strategic intent that does not manifest in procedural action or published text. It models the institutional machinery, not the invisible politics surrounding it.

The database contains five integrated layers.

Imagine that every bill in Congress is a game piece moving across a board. The board has specific squares—"Introduced," "Referred to Committee," "Reported," "On the Calendar," "Floor Consideration," "Passed," and so on through conference and enrollment. The rules say which squares you can move to from any given position, and the moves are triggered by specific congressional actions: a committee vote, a floor vote, a motion, a referral.

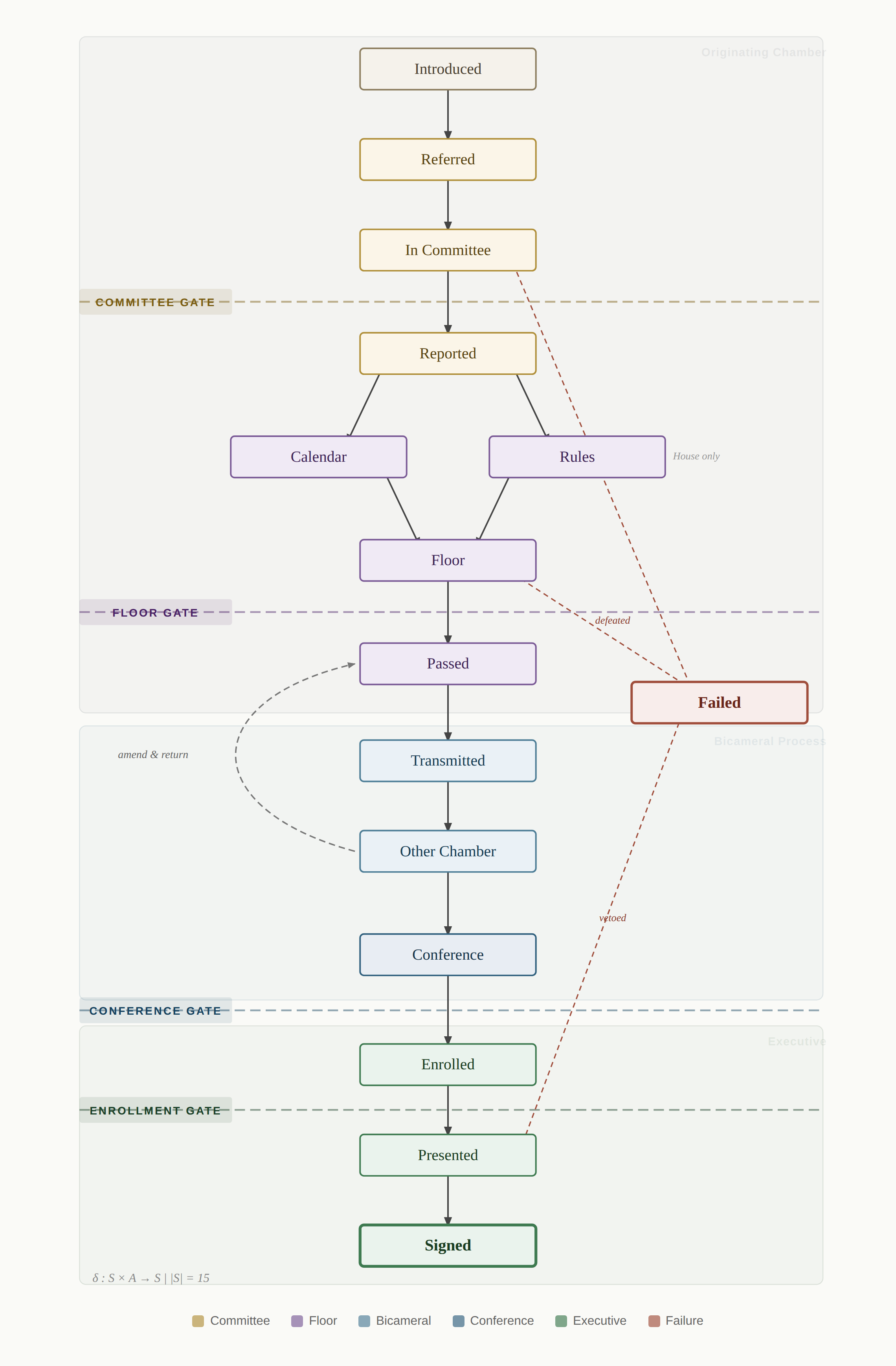

This database reconstructs that entire board for every bill—15 defined procedural states and a transition function that maps each combination of current state and congressional action to the next state. Action codes are normalized into a canonical vocabulary so that surface-level variations in how actions are recorded (the Senate describes things differently than the House) resolve to the same underlying transitions.
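The sketch below shows the general shape of such a transition function. It is a minimal illustration under stated assumptions, not the project's implementation. It uses a hypothetical nine-state subset instead of the full 15 states. The raw action phrasings, the `CANONICAL_ACTIONS` table, and the helpers `normalize` and `replay` are all illustrative and are not the official Bill Status vocabulary. Chamber-specific wording is normalized to one canonical code. Any (state, action) pair with no rule is flagged as irregular rather than silently accepted.

```python
from enum import Enum, auto
from typing import List, Optional, Tuple


class State(Enum):
    # Hypothetical subset of the 15 procedural states, for illustration only.
    INTRODUCED = auto()
    REFERRED = auto()
    REPORTED = auto()
    CALENDAR = auto()
    FLOOR = auto()
    PASSED_CHAMBER = auto()
    OTHER_CHAMBER = auto()
    PASSED_BOTH = auto()
    ENROLLED = auto()


# Hypothetical normalization table: raw action-text prefixes -> canonical action.
# House and Senate phrasings that mean the same thing map to one code.
CANONICAL_ACTIONS = {
    "Referred to the House Committee on": "REFER",
    "Read twice and referred to the Committee on": "REFER",
    "Reported by": "REPORT",
    "Placed on the Union Calendar": "CALENDAR",
    "Placed on Senate Legislative Calendar": "CALENDAR",
    "Considered under the provisions of rule": "FLOOR",
    "Passed/agreed to in House": "PASS",
    "Passed Senate": "PASS",
    "Received in the Senate": "RECEIVE",
    "Presented to President": "ENROLL",
}

# Transition function: (current state, canonical action) -> next state.
TRANSITIONS = {
    (State.INTRODUCED, "REFER"): State.REFERRED,
    (State.REFERRED, "REPORT"): State.REPORTED,
    (State.REPORTED, "CALENDAR"): State.CALENDAR,
    (State.CALENDAR, "FLOOR"): State.FLOOR,
    (State.FLOOR, "PASS"): State.PASSED_CHAMBER,
    (State.PASSED_CHAMBER, "RECEIVE"): State.OTHER_CHAMBER,
    (State.OTHER_CHAMBER, "PASS"): State.PASSED_BOTH,
    (State.PASSED_BOTH, "ENROLL"): State.ENROLLED,
}


def normalize(raw: str) -> Optional[str]:
    """Map a raw action description to a canonical action code, if known."""
    for prefix, code in CANONICAL_ACTIONS.items():
        if raw.startswith(prefix):
            return code
    return None


def replay(raw_actions: List[str]) -> Tuple[State, list]:
    """Replay actions through the FSM, flagging moves with no canonical rule."""
    state = State.INTRODUCED
    path = [(state, None, True)]
    for raw in raw_actions:
        action = normalize(raw)
        if action is None:
            continue  # not procedurally relevant under this vocabulary
        nxt = TRANSITIONS.get((state, action))
        if nxt is None:
            path.append((state, action, False))  # irregular path: state unchanged
        else:
            state = nxt
            path.append((state, action, True))
    return state, path


actions = [
    "Referred to the House Committee on Ways and Means.",
    "Reported by the Committee on Ways and Means.",
    "Placed on the Union Calendar, Calendar No. 12.",
    "Considered under the provisions of rule H. Res. 100.",
    "Passed/agreed to in House: On passage Passed by recorded vote.",
    "Received in the Senate.",
]
final_state, path = replay(actions)  # final_state is State.OTHER_CHAMBER
```

Because the table is plain data, the same input always produces the same path. Every recorded move carries a flag showing whether it followed a declared rule.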

This means you do not merely know that a bill "was reported." You know what state it entered, what gate it passed, what transition rule applied, and whether the transition followed the canonical procedural sequence or represented an irregular path. It is a formal, executable representation of procedure—not a narrative summary.

This layer extends empirical tests of institutional theories to procedural topology: measuring whether negative agenda control operates through procedural routing rather than floor coalition building, testing whether majority-party bills encounter systematically fewer gates than minority-party bills, and tracking how gate configurations have changed across decades of institutional reform.

The state machine abstracts procedural states at the level relevant to text filtering. It does not encode every parliamentary nuance or informal leadership intervention.

Figure 1. Procedural Finite-State Machine: 15 States and Authorized Transitions

Derived from Bill Status XML action codes, 110th–117th Congresses. Dashed lines mark institutional gates; dashed arrows indicate failure transitions.

Every bill accumulates institutional veto points. This layer records them as an ordered stack.

Each bill accumulates committee gates, Rules gates (House only), chamber gates, conference gates, and enrollment gates. For each bill, this gate sequence is deterministically inferred from the state machine transitions—not coded by hand, not estimated from partial records.

This lets you compare the obstacle courses different bills face. You can measure gate density (how many institutional veto points a bill accumulates), gate sequencing (the order in which constraints are encountered), and procedural bottlenecks (where bills stall and for how long). Not ideologically. Mechanically.
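A minimal sketch of how a gate stack could be derived from state transitions follows, along with the three measures named above. The state names, the `GATE_FOR_TRANSITION` mapping, and the treatment of the Calendar-to-Floor step as the House Rules gate are all hypothetical simplifications. For brevity, the sketch also assumes a single-chamber slice of a bill's path.

```python
from datetime import date

# Hypothetical mapping from FSM transitions to the gate each one clears.
GATE_FOR_TRANSITION = {
    ("Referred", "Reported"): "committee",
    ("Calendar", "Floor"): "rules",  # special rule from the Rules Committee
    ("Floor", "PassedChamber"): "chamber",
    ("OtherChamber", "PassedBoth"): "chamber",
    ("Conference", "ConferenceReported"): "conference",
    ("PassedBoth", "Enrolled"): "enrollment",
}


def gate_stack(transitions, chamber):
    """Ordered gates cleared, derived only from (from_state, to_state, date) transitions."""
    stack = []
    for src, dst, when in transitions:
        gate = GATE_FOR_TRANSITION.get((src, dst))
        if gate == "rules" and chamber != "House":
            continue  # Rules gates apply to the House only
        if gate is not None:
            stack.append((gate, when))
    return stack


def dwell_days(transitions):
    """Days spent in each state before the next transition (a simple bottleneck measure)."""
    return [
        (state, (left - entered).days)
        for (_, state, entered), (_, _, left) in zip(transitions, transitions[1:])
    ]


example = [
    ("Introduced", "Referred", date(2021, 1, 4)),
    ("Referred", "Reported", date(2021, 3, 1)),
    ("Reported", "Calendar", date(2021, 3, 2)),
    ("Calendar", "Floor", date(2021, 4, 15)),
    ("Floor", "PassedChamber", date(2021, 4, 16)),
]
stack = gate_stack(example, chamber="House")
density = len(stack)                      # gate density
sequence = [g for g, _ in stack]          # gate sequencing
bottleneck = max(dwell_days(example), key=lambda x: x[1])  # longest stall
```

In this toy path the bill spends 56 days in committee before it is reported, so the committee stage is where it stalls longest.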

Gate stacks make phenomena central to theories of congressional gridlock directly measurable. Has gridlock increased primarily because legislators disagree more, or because the procedural machinery has grown more complex? Do bills fail because they lack majority support, or because they are trapped behind procedural bottlenecks? The gate stack layer allows that decomposition, separating preference-based failure from procedure-based failure in a way that was not previously measurable in systematic form.

When Congress amends a bill, it is not just "changing the bill." Different amendments do different things—some insert new language, some delete existing language, some replace entire sections with new text, some amend other amendments. The database records every one of these operations as a node in a directed graph, with edges connecting each amendment to the text it modifies and to the other amendments it relates to.

Amendment operations are parsed directly from GPO Legislative XML, where insertions, deletions, replacements, and redesignations are explicitly marked using structured XML elements. From this graph, the system computes node-level mutation (which specific text nodes were altered), overwrite ratios (what fraction of base text was replaced versus preserved), replacement depth (how many layers of amendment-on-amendment exist), and branching factor (how many competing amendments target the same text).

Instead of a flat description like "the bill was amended," you get a mutation graph—a complete structural record of how every piece of text changes across authoritative versions, as observable from structured amendment markup and version releases.
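The sketch below shows how three of these graph metrics could be computed. It simplifies in two ways: each amendment targets exactly one node, which is either a base-text atom or another amendment, and the graph is assumed to be acyclic. The `Amendment` record and the metric functions are hypothetical illustrations, not the project's schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Amendment:
    amdt_id: str
    op: str      # "insert" | "delete" | "replace" | "redesignate"
    target: str  # id of a base-text atom or of another amendment


def replacement_depth(amdts):
    """Longest amendment-on-amendment chain (an amendment to base text has depth 1)."""
    by_id = {a.amdt_id: a for a in amdts}
    memo = {}

    def depth(a):
        if a.amdt_id not in memo:
            parent = by_id.get(a.target)  # None when the target is base text
            memo[a.amdt_id] = 1 if parent is None else 1 + depth(parent)
        return memo[a.amdt_id]

    return max((depth(a) for a in amdts), default=0)


def branching_factor(amdts):
    """Largest number of amendments competing for the same target node."""
    counts = Counter(a.target for a in amdts)
    return max(counts.values(), default=0)


def overwrite_ratio(base_atoms, amdts):
    """Fraction of base-text atoms directly deleted or replaced."""
    touched = {a.target for a in amdts
               if a.op in ("delete", "replace") and a.target in base_atoms}
    return len(touched) / len(base_atoms) if base_atoms else 0.0


base = {"s1", "s2", "s3", "s4"}
amdts = [
    Amendment("A1", "replace", "s1"),
    Amendment("A2", "delete", "s2"),
    Amendment("A3", "insert", "A1"),   # second-degree amendment
    Amendment("A4", "replace", "A3"),  # third-degree amendment
    Amendment("A5", "insert", "s1"),   # competes with A1 for s1
]
metrics = (replacement_depth(amdts), branching_factor(amdts), overwrite_ratio(base, amdts))
```

In this toy graph, `metrics` is `(3, 2, 0.5)`. The chain A1 → A3 → A4 gives a depth of 3. Two amendments target s1. Two of the four base atoms are deleted or replaced.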

Questions about deliberative openness that have been studied through case analysis and aggregate rule-type coding become quantifiable at the amendment level. Have floor amendment opportunities declined over time? Has amendment activity centralized in leadership and committee chairs? How often do substitute amendments completely overwrite a bill's text, bypassing the deliberative process? The amendment topology provides continuous, computable metrics for all of these—amendment depth, branching factor, overwrite ratio—across every bill in every Congress in the dataset.

In the modern Congress, much of the most important legislation does not pass through the normal process. Instead, policy is loaded onto "vehicles"—must-pass bills like appropriations, reconciliation bills, or defense authorizations. A bill might be introduced with almost no text (a "shell bill") and then have hundreds of pages of policy inserted later via amendment or conference committee action. At other times, a conference committee produces a report that bears little resemblance to what either chamber actually passed.

The database identifies and classifies these vehicle strategies automatically, using rule-based structural thresholds applied to measurable properties: the ratio of introduced text to final text, the fraction of conference report language absent from chamber versions, the overwrite ratio of substitute amendments.

The classifications—shell vehicles, conference overwrites, strike-all substitutions, reauthorization absorptions—are not probabilistic guesses. The thresholds are explicit, declared, and auditable.
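The sketch below illustrates what explicit, declared thresholds can look like in practice. The threshold values and feature names are hypothetical, chosen only to show the pattern: all cutoffs are declared in one auditable table and applied without any probabilistic step. Reauthorization absorption is omitted because the text does not specify which features define it.

```python
from dataclasses import dataclass

# Hypothetical thresholds, declared in one place so they can be audited.
THRESHOLDS = {
    "shell_max_intro_ratio": 0.05,          # introduced text / final text
    "conference_min_novel_fraction": 0.50,  # conference text absent from both chambers
    "strike_all_min_overwrite": 0.95,       # overwrite ratio of a substitute amendment
}


@dataclass
class VehicleFeatures:
    introduced_atoms: int
    final_atoms: int
    conference_novel_fraction: float
    substitute_overwrite_ratio: float


def classify_vehicle(f, t=THRESHOLDS):
    """Apply each declared rule; a bill can carry several labels or none."""
    labels = []
    if f.final_atoms and f.introduced_atoms / f.final_atoms <= t["shell_max_intro_ratio"]:
        labels.append("shell_vehicle")
    if f.conference_novel_fraction >= t["conference_min_novel_fraction"]:
        labels.append("conference_overwrite")
    if f.substitute_overwrite_ratio >= t["strike_all_min_overwrite"]:
        labels.append("strike_all_substitution")
    return labels


labels = classify_vehicle(VehicleFeatures(3, 400, 0.10, 0.98))
```

Here `labels` is `["shell_vehicle", "strike_all_substitution"]`. Changing a cutoff changes the classification in a traceable way, which is what makes the rules auditable.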

That changes the empirical footing of how legislative strategy is studied. When are vehicles deployed—under divided government, during must-pass deadlines, for controversial policy? How often do conference committees introduce substantial new policy that was never considered by either chamber? Has reconciliation been increasingly exploited for non-budgetary policy? These questions have been studied through case analysis and bill-level comparison (Sinclair 2016; Krutz 2001). This framework extends them to provision-level, XML-faithful, deterministic measurement across the full legislative record.

This layer is structurally central. At the level of individual provisions—paragraphs and subparagraphs of legislative text—the database tracks where every piece of language came from and where it went.

The system defines the atomic text unit as the smallest XML-native, unsplit legislative text element: one leaf node from GPO Legislative XML, identified by a deterministic path that ensures stability across system rebuilds. Each atom links to its structural parent in a full containment hierarchy (atom → paragraph → subsection → section → title → bill), with sibling relationships, cross-references, and dependency edges all explicitly stored and queryable. Lineage is tracked across versions using deterministic rules with explicit method labels—no lineage is ever inferred without recording how it was established.
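A minimal sketch of atom extraction using Python's standard `xml.etree.ElementTree` follows. It assumes that an atom is any element with no element children and non-empty text, and that `<enum>` numbering labels are not atoms. The positional path scheme (tag plus index among same-tag siblings) is a hypothetical choice. Such a path is deterministic for a given input file, which is the property described above, although it is not stable across versions in which sections are inserted or removed.

```python
import hashlib
import xml.etree.ElementTree as ET

LABEL_TAGS = {"enum"}  # hypothetical: numbering labels are not treated as atoms


def extract_atoms(xml_text):
    """Return leaf text elements with deterministic positional paths."""
    root = ET.fromstring(xml_text)
    atoms = []

    def walk(elem, path):
        children = list(elem)
        if not children:
            text = (elem.text or "").strip()
            if text and elem.tag not in LABEL_TAGS:
                atoms.append({
                    "path": path,
                    "text": text,
                    "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
                })
            return
        seen = {}
        for child in children:
            # Index among same-tag siblings, e.g. section[2].
            seen[child.tag] = seen.get(child.tag, 0) + 1
            walk(child, f"{path}/{child.tag}[{seen[child.tag]}]")

    walk(root, f"/{root.tag}[1]")
    return atoms


def parent_path(path):
    """Containment edge: the structural parent of an atom or element."""
    return path.rsplit("/", 1)[0]


SAMPLE = """<bill><legis-body>
  <section><enum>1.</enum><header>Short title</header>
    <text>This Act may be cited as the Example Act.</text></section>
  <section><enum>2.</enum>
    <subsection><enum>(a)</enum><text>The Secretary shall establish a program.</text></subsection>
  </section>
</legis-body></bill>"""

atoms = extract_atoms(SAMPLE)
```

Calling `parent_path` repeatedly walks up the containment hierarchy from atom to subsection, section, and bill. This traversal is what the atom → paragraph → ... → bill chain requires.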

When text appears between versions without a corresponding amendment record—which happens constantly, because committee markups, manager's amendments, and conference negotiations do not generate structured amendment records—the system detects the appearance by comparing authoritative versions and classifies each insertion by procedural interval.
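The sketch below shows one way to compare versions with explicit lineage method labels. It matches atoms by a hash of whitespace- and case-normalized text. Any later-version atom with no match and no amendment record is classified as an untracked insertion, labeled with the interval between the two versions. The version codes follow GPO's bill-version abbreviations (`ih`, `rh`, `eh`, `enr`). The interval names, the normalization rule, and the method labels are all hypothetical.

```python
import hashlib
import re

# Hypothetical mapping from a pair of version codes to a procedural interval.
INTERVALS = {
    ("ih", "rh"): "committee_markup",
    ("rh", "eh"): "house_floor",
    ("eh", "enr"): "bicameral_resolution",
}


def norm_hash(text):
    """Hash of text after collapsing whitespace and lowercasing."""
    norm = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(norm.encode("utf-8")).hexdigest()


def diff_versions(prev_atoms, next_atoms, prev_code, next_code, amended_paths=frozenset()):
    """prev_atoms/next_atoms map atom path -> text. Every lineage edge records its method."""
    prev_by_hash = {norm_hash(t): p for p, t in prev_atoms.items()}
    interval = INTERVALS.get((prev_code, next_code), "unclassified")
    lineage, insertions = [], []
    for path, text in next_atoms.items():
        src = prev_by_hash.get(norm_hash(text))
        if src is not None:
            lineage.append({"from": src, "to": path, "method": "exact_normalized_hash"})
        elif path in amended_paths:
            lineage.append({"from": None, "to": path, "method": "amendment_record"})
        else:
            insertions.append({"path": path, "interval": interval, "method": "version_diff"})
    return lineage, insertions


ih = {"/s1/t1": "The Secretary shall establish a program.",
      "/s2/t1": "Funds are authorized."}
rh = {"/s1/t1": "The Secretary  shall establish a program.",
      "/s2/t1": "Funds are authorized.",
      "/s3/t1": "The program shall terminate in 2030."}
lineage, insertions = diff_versions(ih, rh, "ih", "rh")
```

The third reported-version atom has no predecessor and no amendment record, so it is classified as an untracked insertion during committee markup.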

This layer makes four patterns measurable: full survival (provisions that persist from introduction to enrollment), partial incorporation (fragments absorbed into other measures), fragment diffusion (language migrating across bills and policy domains), and preloaded dominance (late-stage text overwhelming earlier deliberative content).

This layer supports some of the most practically significant research the database enables. Casas, Denny, and Wilkerson (2020) identified "legislative hitchhikers"—provisions attached to moving vehicles. Eatough and Preece (2025) and Volden et al. (2024) have measured behind-the-scenes lawmaking and embedded bill credit using text similarity. This framework extends that work by introducing what I call "ghost bills"—formally introduced measures that fail procedurally yet whose language survives through incorporation into enacted vehicles—and detecting them through deterministic atomic lineage rather than similarity scoring. The distinction is methodological: lineage here is established through XML-faithful structural tracking, not probabilistic text matching.

It also enables provenance tracking for enacted law, constructing end-to-end lineage chains from introduced text through amendments, conference reports, and enrolled bills to the U.S. Code sections they modify. And it supports measurement of legislative text reuse and template language diffusion across policy domains and Congresses—identifying the boilerplate provisions, enforcement mechanisms, and definitional frameworks that recur throughout federal statute.

For example, a two-sentence provision establishing a grant program may be introduced in one bill, partially incorporated into a larger authorization measure during committee markup, and finally enacted through a conference report attached to a different vehicle entirely. The lineage layer reconstructs that path deterministically.

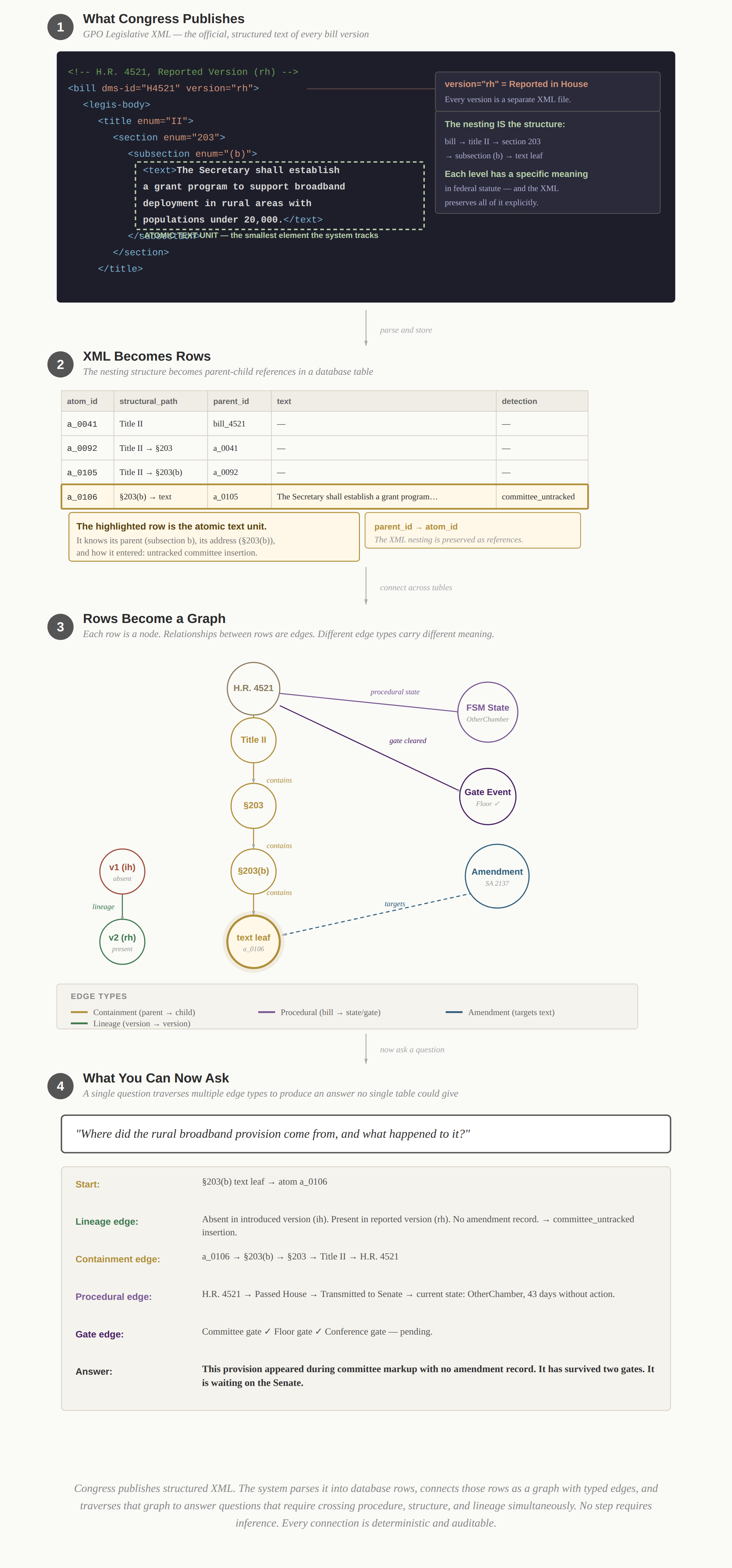

Figure 2. From Congressional XML to Queryable Graph: The Pipeline

One provision traced through four stages: what Congress publishes, how it becomes structured data, how structure becomes a graph, and what you can ask.

The five layers described above are not five separate datasets that happen to live in the same database. They are five different views of the same legislative process, connected at the provision level through shared keys. All five layers share a common coordinate system keyed to the bill and the atomic text unit, which allows traversal across procedure, amendment structure, vehicle classification, and lineage without duplication or inference.

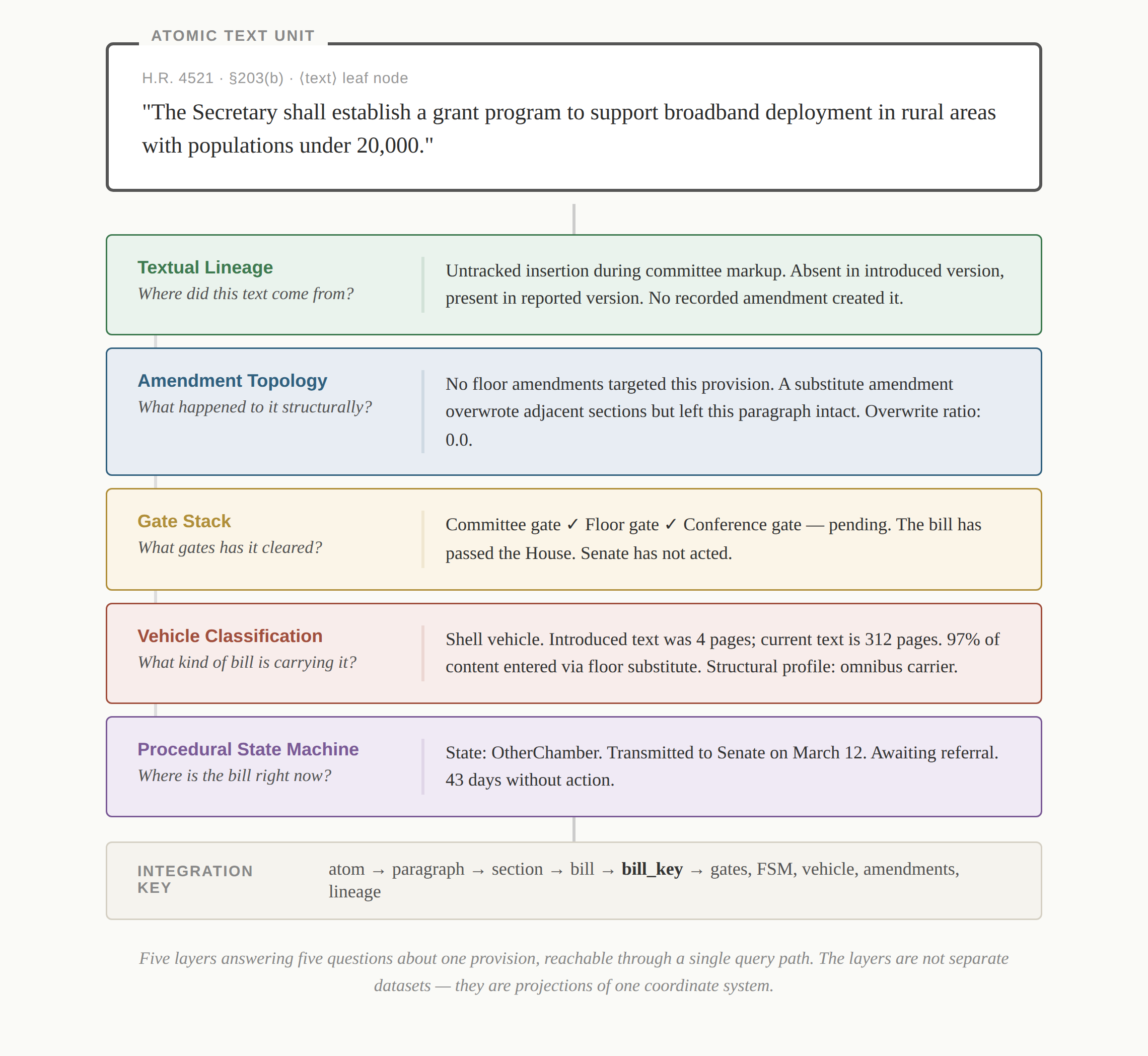

Consider a single atomic provision—one paragraph of statutory text sitting in a bill currently moving through Congress. From that one provision, you can traverse the full database.

The lineage layer tells you this provision appeared as an untracked insertion during committee markup—it was absent in the introduced version and present in the reported version, with no corresponding amendment record. The amendment topology confirms it: no recorded amendment created this text. It materialized between versions through whatever happened in the committee's black box. The gate stack tells you the bill it sits in has cleared committee and floor in the House but has not yet been taken up by the Senate. The vehicle layer tells you this bill has a structural profile consistent with a shell vehicle—minimal introduced text, high overwrite ratio from floor substitution. The state machine tells you the bill is currently in the OtherChamber state, awaiting Senate action.

That is five layers answering five different questions about one provision, reachable through a single query path: atom → structural parent → document → bill → gate events, amendment DAG, vehicle classification, FSM state. The shared key—`bill_key`—is the integration mechanism. The layers are different projections of the same object.

Figure 3. Five Layers, One Provision: An Integrated Query

Each layer answers a different question about the same atomic text unit. All five are reachable through a single traversal keyed to the provision and its bill.
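The sketch below shows the single-traversal idea as an in-memory SQLite query using Python's standard `sqlite3` module. The table names, columns, detection-context label, and `bill_key` format are hypothetical. The toy rows mirror the example above: an untracked committee-markup insertion, no amendment record, committee and House floor gates cleared, a shell-vehicle profile, and the bill awaiting Senate action.

```python
import sqlite3

SCHEMA = """
CREATE TABLE atoms (atom_id TEXT PRIMARY KEY, bill_key TEXT, path TEXT, detection_context TEXT);
CREATE TABLE gate_events (bill_key TEXT, seq INTEGER, gate TEXT);
CREATE TABLE amendments (amdt_id TEXT, bill_key TEXT, target_atom TEXT);
CREATE TABLE vehicle_class (bill_key TEXT, label TEXT);
CREATE TABLE fsm_state (bill_key TEXT PRIMARY KEY, state TEXT);
"""


def provision_profile(conn, atom_id):
    """Start at one atom and reach every layer through the shared bill_key."""
    bill_key, context = conn.execute(
        "SELECT bill_key, detection_context FROM atoms WHERE atom_id = ?", (atom_id,)
    ).fetchone()
    return {
        "bill_key": bill_key,
        "detection_context": context,
        "amendments_on_atom": [r[0] for r in conn.execute(
            "SELECT amdt_id FROM amendments WHERE target_atom = ? ORDER BY amdt_id", (atom_id,))],
        "gates": [r[0] for r in conn.execute(
            "SELECT gate FROM gate_events WHERE bill_key = ? ORDER BY seq", (bill_key,))],
        "vehicle": [r[0] for r in conn.execute(
            "SELECT label FROM vehicle_class WHERE bill_key = ?", (bill_key,))],
        "fsm_state": conn.execute(
            "SELECT state FROM fsm_state WHERE bill_key = ?", (bill_key,)).fetchone()[0],
    }


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO atoms VALUES ('a1', '117-hr-1234', "
             "'/bill[1]/legis-body[1]/section[3]/text[1]', 'untracked:committee_markup')")
conn.executemany("INSERT INTO gate_events VALUES (?, ?, ?)",
                 [("117-hr-1234", 1, "committee"), ("117-hr-1234", 2, "rules"),
                  ("117-hr-1234", 3, "chamber")])
conn.execute("INSERT INTO vehicle_class VALUES ('117-hr-1234', 'shell_vehicle')")
conn.execute("INSERT INTO fsm_state VALUES ('117-hr-1234', 'OtherChamber')")
profile = provision_profile(conn, "a1")
```

No layer needs its own copy of the bill. Each one is reached by joining on the same key, which is the integration property described above.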

This integration is what makes the database more than a collection of useful tables. A researcher studying conference committee power can start from the lineage layer (which provisions did the conference committee add or remove?), traverse to the amendment topology (were those provisions previously the subject of floor amendments?), check the gate stack (how many gates had the bill already cleared?), and consult the vehicle classification (is this a must-pass vehicle where conference power is structurally amplified?). Each layer contributes a different dimension of the answer. No single layer could produce the full picture.

The same integration makes predictive modeling possible, as described later. A provision's survival prospects depend simultaneously on its detection context (lineage layer), its structural position in the amendment graph (topology layer), the procedural trajectory of its bill (FSM and gate stack), and the vehicle dynamics surrounding it (vehicle layer). These are not competing explanations. They are jointly observable features of the same provision, queryable together because the layers share a coordinate system.

An analogy: imagine trying to understand a city's transportation system. You could survey residents about their commuting preferences. You could count how many people arrive at each destination. Or you could map every road, intersection, traffic light, and on-ramp—the physical infrastructure that determines what routes are even possible. This project maps the infrastructure. The five layers are not five maps. They are one map with five kinds of information overlaid on the same streets.

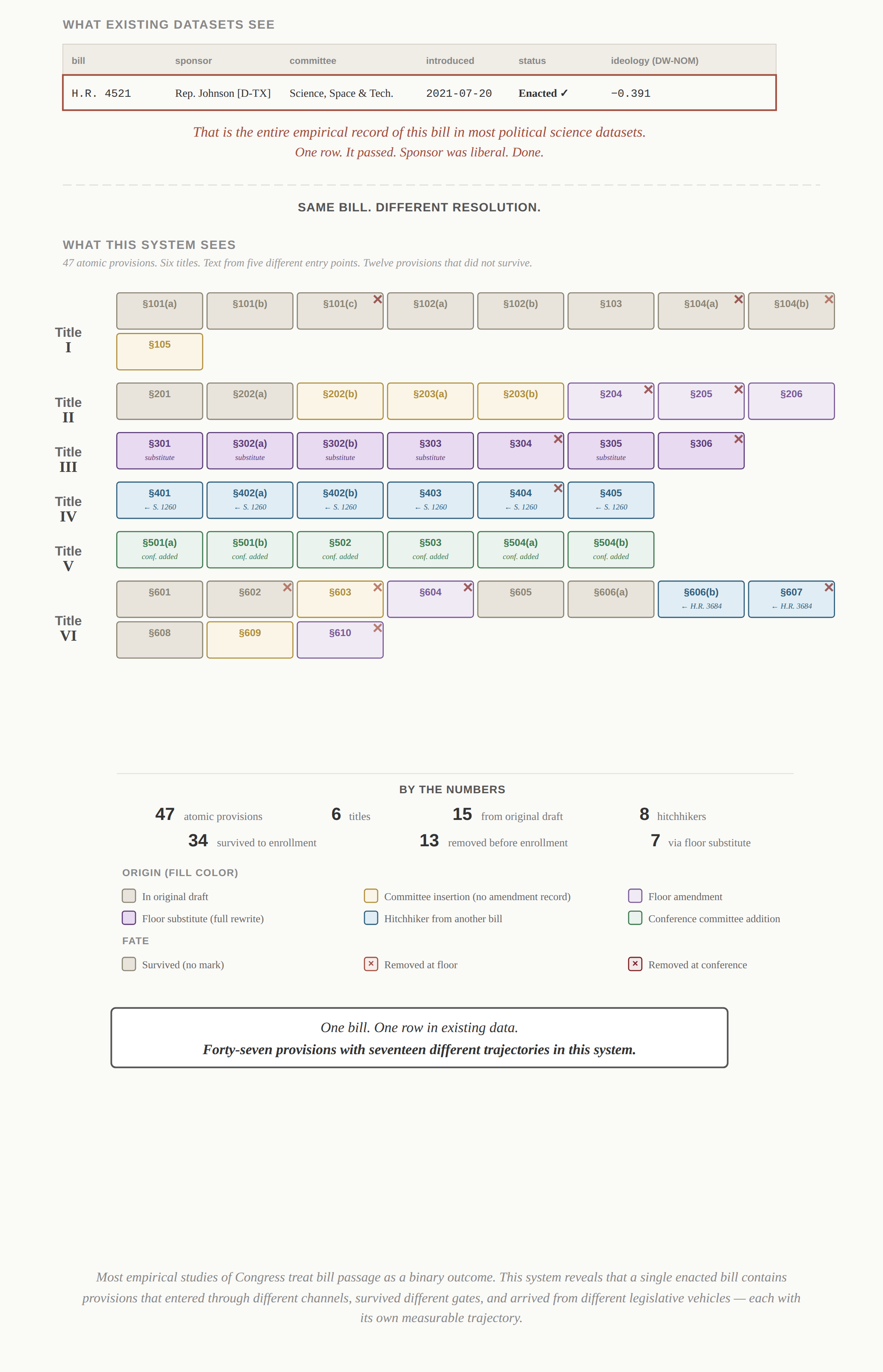

Existing congressional data infrastructure is rich in bill-level outcomes, ideological measurement, and text similarity metrics. What it has not yet provided is an integrated reconstruction of the procedural path legislation travels, the mutation architecture through which text is modified, the absorption mechanics by which policy migrates across measures, and the structural constraints that determine which paths are even possible—all at provision-level resolution, grounded in deterministic XML parsing.

A rich literature has examined legislative survival, omnibus strategy, hitchhikers, and agenda control using bill-level data, similarity metrics, and hand-coded institutional measures. This project does not reopen those domains; it reconstructs them at a different level of granularity. By grounding analysis in atomic XML units and integrating procedural state transitions, gate stacks, amendment graphs, vehicle classification, and cross-version lineage into a replayable system, it transforms questions previously approached at the bill or section level into provision-level, gate-specific measurements. The novelty lies not in the topics, but in the resolution, determinism, and architectural integration through which they are observed.

This database enables a different kind of analysis—structural rather than behavioral, mechanical rather than ideological. Its value extends across multiple audiences and disciplines.

Figure 4. What Existing Data Sees vs. What This System Sees

The same bill at two levels of resolution. One row, or forty-seven provisions with seventeen different trajectories.

For political scientists, the framework opens at least five major research clusters.

Institutional power: measuring negative agenda control through procedural topology, decomposing committee power into referral gatekeeping versus amendment gatekeeping, formalizing leadership procedural interventions as graph transformations.

Strategic behavior: systematically identifying ghost bills and measuring behind-the-scenes lawmaking effectiveness, classifying vehicle strategies at scale, measuring conference committee text divergence from chamber-passed versions.

Temporal dynamics: decomposing gridlock into preference-based versus procedure-based components, tracking amendment openness and deliberative structure over time, measuring reconciliation exploitation for non-budgetary policy.

Computational law: building multi-Congress text lineage graphs tracking how the U.S. Code mutates through amendment, measuring legislative text reuse and statutory borrowing, constructing end-to-end provenance for enacted law.

Predictive analytics: using gate stack configurations and amendment topology metrics as features for legislative outcome prediction, complementing vote-prediction models with pre-floor procedural filtering.

For journalists and watchdogs, the framework makes procedural manipulation visible and searchable: late-stage insertions, conference overwrites, vehicle substitution, gatekeeping patterns. A transparency dashboard built on this infrastructure exposes gate stacks, amendment topology, and vehicle classifications for active legislation in real time—reducing the information asymmetry between insiders and the public.

For legislative staff, it provides structural insight—where bills structurally fail, which procedural stages absorb the most policy, what typical gate sequencing patterns look like.

For legal scholars and historians, it enables procedural replay of any bill's trajectory, structural comparison across eras, and what amounts to "git blame" for federal law—tracing any enacted paragraph backward through amendment, conference, and vehicle incorporation to its legislative origin.

The framework makes the following patterns directly measurable:

Increasing amendment centralization over time. Floor amendment opportunities have declined as leadership has deployed more restrictive rules and structured the amendment process more tightly. The amendment topology layer provides the metrics—depth, branching factor, success rates—to measure this directly.

Shifts from committee authorship to conference dominance. The fraction of enacted text originating in conference committee insertions rather than committee-reported bills has grown, concentrating drafting power in a smaller set of actors. The textual lineage layer makes this measurable at the provision level.

Concentration of policy insertion at late procedural stages. Substantive policy increasingly enters legislation at conference or through manager's amendments rather than through the traditional committee-floor-conference sequence. The detection context classifications in the lineage layer reveal exactly when and where text enters the process.

The proceduralization of gridlock. Gate stack depth has increased over time, with bills encountering more procedural veto points regardless of underlying policy agreement. The framework can decompose gridlock into its preference-based and procedure-based components—a distinction central to legislative theory but not previously operationalized at scale.

Rising overwrite intensity in omnibus vehicles. Must-pass legislation exhibits increasing rates of complete text substitution, conference divergence, and shell bill usage. The vehicle inference layer tracks these patterns automatically.

Growth in provision fragmentation and recombination. Policy text is increasingly fragmented across multiple bills and recombined at late stages, making it harder to trace legislative intent or credit authorship. The lineage layer makes this pattern visible.

These are structural findings. They describe the machinery. They do not assign partisan blame.

The first research application of this framework is deliberately narrow: provision survival and incorporation dynamics. It was chosen as the initial target for several specific reasons.

First, provision survival sits at the intersection of three of the framework's five layers—amendment topology, textual lineage, and gate stacks—making it a strong test of the database's integrated capabilities. Second, it requires no predictive modeling, no behavioral theory, and no causal identification, which means the findings rest entirely on the deterministic infrastructure and are not entangled with contested theoretical assumptions. Third, it produces a new dependent variable—provision survival probability—that has not previously been operationalized at provision-level resolution, demonstrating the framework's ability to extend existing measurement approaches to atomic granularity.

The paper asks: conditional on reaching a given procedural stage, how durable is statutory text that appears at different points in the legislative process, and at which gates is that text removed? Using an analytic sample of 412,836 atomic text units across 6,214 bills in the 110th–117th Congresses (2007–2022), it introduces two outcome variables: provision survival probability (whether a specific text unit survives to enrollment) and procedural death gate (the specific institutional checkpoint at which non-surviving text is removed). Preliminary analysis suggests that survival varies sharply by detection context—text first appearing in conference reports survives at substantially higher rates than text traceable to recorded floor amendments—and that different gates systematically filter text from different sources.
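The sketch below shows how the two outcome variables could be tabulated. It uses a handful of invented toy records that are purely illustrative and are not drawn from the analytic sample. Each record's `last_stage` is the furthest stage at which the text was present, and `death_gate` is the gate that removed it (`None` if it reached enrollment). The stage names and detection-context labels are hypothetical.

```python
from collections import Counter, defaultdict

STAGE_ORDER = ["introduced", "reported", "floor", "conference", "enrolled"]

# Toy records for illustration only; not results.
ATOMS = [
    {"context": "conference_report", "last_stage": "enrolled", "death_gate": None},
    {"context": "conference_report", "last_stage": "enrolled", "death_gate": None},
    {"context": "floor_amendment", "last_stage": "floor", "death_gate": "conference"},
    {"context": "floor_amendment", "last_stage": "enrolled", "death_gate": None},
    {"context": "floor_amendment", "last_stage": "floor", "death_gate": "conference"},
    {"context": "introduced", "last_stage": "introduced", "death_gate": "committee"},
    {"context": "introduced", "last_stage": "enrolled", "death_gate": None},
]


def survival_by_context(atoms, min_stage):
    """Share surviving to enrollment, among atoms present at min_stage, by detection context."""
    cutoff = STAGE_ORDER.index(min_stage)
    totals, survived = Counter(), Counter()
    for a in atoms:
        if STAGE_ORDER.index(a["last_stage"]) >= cutoff:
            totals[a["context"]] += 1
            survived[a["context"]] += int(a["death_gate"] is None)
    return {c: survived[c] / totals[c] for c in totals}


def death_gates(atoms):
    """For non-surviving atoms, count the gate at which each was removed."""
    out = defaultdict(Counter)
    for a in atoms:
        if a["death_gate"] is not None:
            out[a["context"]][a["death_gate"]] += 1
    return out


rates_from_floor = survival_by_context(ATOMS, "floor")
gates = death_gates(ATOMS)
```

Conditioning on `min_stage` yields survival rates for text that actually reached a given stage. The death-gate table shows where each category of text tends to be removed.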

The provision survival paper is subject to a rigorous validation methodology centered on XML-faithfulness: gate sequence fidelity is tested against Bill Status XML action codes, paragraph tracking accuracy is measured against Legislative XML structural nodes, and amendment operation recall is validated against amendment XML markup. All validation targets are deterministic, schema-defined, and reproducible. This validation framework applies specifically to the first paper's claims about the deterministic layer and does not extend to the broader research agenda, which will require its own validation strategies appropriate to each research question.
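The text does not give formulas for these validation targets. The sketch below offers one plausible reading, stated as an assumption. Amendment operation recall is the share of operations found in the amendment markup that the parser recovers. Gate sequence fidelity is the share of bills whose inferred gate sequence exactly matches the sequence expected from the action codes. The function names and data shapes are hypothetical.

```python
def operation_recall(parsed, reference):
    """Share of reference operations (from amendment markup) recovered by the parser."""
    reference = set(reference)
    if not reference:
        return 1.0
    return len(reference & set(parsed)) / len(reference)


def sequence_fidelity(inferred, expected):
    """Share of bills whose inferred gate sequence exactly matches the expected one."""
    if not expected:
        return 1.0
    matches = sum(inferred.get(bill) == seq for bill, seq in expected.items())
    return matches / len(expected)


recall = operation_recall(
    parsed={("s1", "delete"), ("s2", "insert")},
    reference={("s1", "delete"), ("s2", "insert"), ("s3", "replace"), ("s4", "insert")},
)
fidelity = sequence_fidelity(
    inferred={"b1": ["committee", "chamber"], "b2": ["committee"]},
    expected={"b1": ["committee", "chamber"], "b2": ["committee", "chamber"]},
)
```

Both measures compare pipeline output against targets that are themselves read from the schema, so they can be recomputed exactly on every rebuild.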

This application is a proof of concept. It demonstrates what the database can do. The broader value of the framework lies in the full range of research it enables—and in the extension described next.

The system described so far reconstructs the institutional structure of Congress—procedure, gates, amendments, vehicles, and textual lineage—from authoritative sources. It tells you where a provision sits in the process, how it got there, what happened to it structurally, and whether it survived.

It cannot yet see meaning. And without meaning, structure alone cannot explain persistence.

Two provisions in different bills, drafted by different offices in different Congresses, may attempt the same policy intervention using entirely different language. The deterministic layer has no way to connect them. They share no structural relationship, no textual overlap, no lineage edge. As far as the database is concerned, they are unrelated.

Prior NLP work has embedded legislative text at the bill or section level for classification and similarity. This system embeds atomic provisions with structural ancestry, integrated into the deterministic procedural graph. The result is a new form of observation: semantic adjacency. An embedding represents a provision as a point in high-dimensional semantic space. Provisions that mean similar things end up near each other, even if they use different words. "The Secretary shall establish a whistleblower protection office" and "A new office for the protection of individuals reporting misconduct shall be created by the Secretary" occupy nearby positions despite sharing almost no vocabulary. This is a measurable relationship—an edge based on meaning rather than structure or text identity.
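The sketch below shows how semantic adjacency becomes an edge type. It computes cosine similarity between provision embeddings and adds an edge when similarity clears a threshold. The three-dimensional vectors are hand-made stand-ins: real embeddings would come from a text embedding model and have hundreds of dimensions. The 0.8 threshold and the provision ids are likewise hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def semantic_edges(vectors, threshold=0.8):
    """Emit (id_a, id_b, similarity) edges for pairs at or above the threshold."""
    ids = sorted(vectors)
    edges = []
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            s = cosine(vectors[a], vectors[b])
            if s >= threshold:
                edges.append((a, b, round(s, 3)))
    return edges


# Toy stand-ins for embeddings of the two whistleblower provisions and an unrelated one.
vectors = {
    "whistleblower_a": [0.9, 0.1, 0.0],
    "whistleblower_b": [0.8, 0.2, 0.1],
    "tariff": [0.0, 0.1, 0.95],
}
edges = semantic_edges(vectors)
```

Only the two whistleblower provisions are linked. The resulting edge sits alongside, and does not replace, the containment and lineage edges built from XML.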

Before introducing what semantic adjacency changes, it is worth being explicit about what the system already does.

The database is a knowledge graph. Its nodes are atomic provisions, bills, amendments, committees, gates, and Congresses. Its edges are containment (provision sits inside section sits inside title), lineage (this provision descended from that one), amendment operations (this amendment deleted that text), procedural transitions (this bill moved from committee to floor), and cross-references (this provision cites that one).

From a single provision, the system can traverse upward to its bill, across to the bill's amendment history and gate stack, forward to its conference treatment and survival outcome, and backward to prior versions that contained related language. It can move from a provision to its bill's committee, to that committee's historical report rate, to the Congress's political context. These are compound queries that cross multiple edge types—multi-hop reasoning through the institutional graph.

This is already a reasoning engine. Embeddings do not create the graph. They complete it, adding the one edge type it was missing.

The deterministic system contains containment edges, lineage edges, amendment edges, procedural edges, cross-reference edges, and adjacency edges. Semantic proximity is the first edge type that connects provisions based on what they say rather than where they sit or how they got there.

Once meaning becomes measurable, the system can observe phenomena that were structurally invisible:

Policy equivalence across time. Cluster every provision Congress has ever written that attempts the same policy intervention—regardless of bill number or wording. If a grant program has been introduced in 12 Congresses, rewritten each time, dying in committee under divided government but surviving when attached to appropriations vehicles, that pattern becomes visible. The database measures not just whether an idea survives, but under what structural conditions it tends to survive.

Idea persistence across party control. Track whether the same policy concepts reappear under different majorities, in different vehicles, with different structural profiles. Measure whether ideas persist through procedural failure and re-emerge in new institutional contexts.

Paraphrased incorporation. Detect cases where committee counsel rewrote, restructured, or combined provisions from multiple sources into new language that the deterministic layer cannot match. Surface the semantic near-misses that strict textual comparison alone would miss.

Semantic outliers within bills. Identify provisions that are semantically distant from everything else in their bill—potential riders, late-stage insertions from unrelated policy domains, or conference additions that fall outside the bill's original scope.

Semantic distance between stages. Measure how much a bill's meaning changes between committee text and conference text. Quantify the semantic divergence that conference committees introduce, at the provision level, across the full legislative record.

Policy demand mapping. When many bills introduce semantically similar provisions but none advance, the density of attempts signals latent policy demand. Estimate whether that demand is likely to find a vehicle based on historical absorption patterns and current legislative conditions.

Historical analogue matching. For any provision currently moving through Congress, identify its closest semantic analogues in the historical record—provisions that said something similar, in similar structural positions, under similar congressional conditions—and observe what happened to them.

Semantic proximity is a signal, not a finding. It suggests where to look; it does not establish influence or authorship. Structural truth remains grounded in authoritative XML and deterministic validation. The embedding layer expands what can be asked without altering what is known.

The database reconstructs the structure through which legislative power operates. Different audiences encounter that structure differently.

Current legislative tracking tools report what happened—who introduced a bill, where it was referred, whether it moved. This system analyzes every paragraph of a bill against the historical record of how Congress has processed text like it under comparable conditions. It identifies which provisions resemble language that has historically survived committee, which resemble language that tends to be stripped on the floor, and which resemble language that frequently reappears in must-pass vehicles after failing procedurally. A draft bill could be evaluated provision by provision: this section resembles language that historically stalls in committee; this clause resembles language that tends to be rewritten in conference; this structure resembles language that survives only when attached to must-pass vehicles. It estimates whether the structural profile of a bill makes it more likely to become a vehicle, to be absorbed into one, or to stall at a predictable gate. That transforms legislative intelligence from tracking what happened to evaluating, in real time, how the institutional machine is likely to treat a specific paragraph of statutory text.

Advocacy strategy often relies on informal knowledge of committee dynamics and past experience. This system makes those structural patterns measurable. An advocacy organization could ask, for example, whether provisions framed in mandatory regulatory terms have historically been rewritten in conference when they originate in a particular committee, and whether similar policy goals framed as pilot programs have survived at higher rates. It could check whether policy ideas that are introduced repeatedly but fail formally tend to reappear inside omnibus vehicles. Strategy can then be grounded in the observable institutional record rather than in anecdote.

Consider negative agenda control (Cox and McCubbins 2005), one of the most influential theories in congressional studies. The theory argues that majority parties prevent measures that would split the majority from reaching the floor. Prior empirical tests have focused on bill scheduling and roll-call exposure: what gets scheduled and what gets voted on. Those tests could not reach whether negative agenda control also operates at the provision level. Do majority party leaders filter entire bills, or specific text inside bills? Are majority-threatening provisions stripped before floor consideration while the rest of the bill advances? Does conference function as a second agenda control mechanism that rewrites provisions that would fracture coalitions? With provision-level survival data tied to procedural gates, these questions become empirically tractable. That allows one of the core theories of legislative organization to be tested at the level at which it may actually operate, shifting the empirical foundation of legislative theory from bill-level inference to provision-level measurement.

The same applies to the literature on omnibus and unorthodox lawmaking. Existing studies have examined these practices through case analysis and bill-level text similarity (Krutz 2001; Casas et al. 2020; Eatough and Preece 2025). With provision-level lineage, scholars can measure what share of enacted text originated outside the final vehicle, quantify how much conference reports diverge from chamber-passed language, and detect when formally failed measures contribute enacted text through the ghost bill dynamic, across the full legislative record rather than selected cases.

Beyond testing existing theories, the framework opens research spaces that did not previously exist. Provision-level policy evolution: how enforcement clauses, definitions, regulatory structures, and grant formulas mutate across decades. Institutional filtering by text type: whether redistributive provisions are filtered earlier than regulatory provisions, and whether definitional sections are more durable than enforcement clauses. Structural residuals as political intervention: if a structural survival model shows systematic deviations, such as provisions that survive despite high predicted death risk, those residuals may signal political intervention and become measurable objects in their own right.

For journalists and watchdog organizations, the system makes the institutional machinery inspectable. Which provisions were added after committee markup? Which first appeared in conference? How much of an enacted law originated in bills that never advanced on their own? Which gates filtered which types of policy? These questions have always been answerable in theory for individual bills through painstaking manual research. This system makes them answerable at scale, across the full legislative record, in a format that supports public scrutiny.

Most people look at Congress and see votes. Most data looks at bills and sees outcomes.

This system looks at text and sees filtration, mutation, absorption, survival, and procedural routing—at atomic resolution.

It reconstructs the machine itself—the procedural infrastructure, the textual assembly line, the gates and filters and vehicles through which every word of federal law must pass. With the addition of semantic embeddings, it extends from pure reconstruction into forecasting, connecting the structural record of how Congress has worked to probabilistic estimates of how it is working now.

This is a computable representation of legislative power at the level of language. Not at the level of votes. Not at the level of bills. At the level of the statutory text that institutions actually filter, retain, and enact.

For different audiences—intelligence platforms, advocates, scholars, journalists—the value is different. But the core object is the same: a structural, replayable model of how law is actually assembled—at the level of language, not votes.

Binder, Sarah A. 1999. "The Dynamics of Legislative Gridlock, 1947–96." American Political Science Review 93(3): 519–533.

Casas, Andreu, Matthew J. Denny, and John D. Wilkerson. 2020. "More Effective Than We Thought: Accounting for Legislative Hitchhikers Reveals a More Inclusive and Productive Lawmaking Process." American Journal of Political Science 64(1): 5–18.

Cox, Gary W., and Mathew D. McCubbins. 2005. Setting the Agenda: Responsible Party Government in the U.S. House of Representatives. Cambridge University Press.

Eatough, Ella, and Jessica Preece. 2025. "Crediting Invisible Work: Congress and the Lawmaking Productivity Metric." American Political Science Review 119(2): 566–584.

Fenno, Richard F. 1973. Congressmen in Committees. Little, Brown.

Kim, In Song, Carson Rudkin, and Luke Delano. 2025. "Bulk Ingestion of Congressional Actions and Materials Dataset." Scientific Data 12: Article 1067.

Krehbiel, Keith. 1991. Information and Legislative Organization. University of Michigan Press.

Krehbiel, Keith. 1998. Pivotal Politics: A Theory of U.S. Lawmaking. University of Chicago Press.

Krutz, Glen S. 2001. Hitching a Ride: Omnibus Legislating in the U.S. Congress. Ohio State University Press.

Longley, Lawrence D., and Walter J. Oleszek. 1989. Bicameral Politics: Conference Committees in Congress. Yale University Press.

Oleszek, Walter J. 2016. Congressional Procedures and the Policy Process (10th ed.). CQ Press.

Rohde, David W. 1991. Parties and Leaders in the Postreform House. University of Chicago Press.

Shepsle, Kenneth A., and Barry R. Weingast. 1987. "The Institutional Foundations of Committee Power." American Political Science Review 81(1): 85–104.

Sinclair, Barbara. 2016. Unorthodox Lawmaking: New Legislative Processes in the U.S. Congress (5th ed.). CQ Press.

Tsebelis, George. 2002. Veto Players: How Political Institutions Work. Princeton University Press.

Volden, Craig, Alan E. Wiseman, Thomas Demirci, Haley Morse, and Katie Sullivan. 2024. "Effective Lawmaking Behind the Scenes." Center for Effective Lawmaking Working Paper.

Weingast, Barry R., and William J. Marshall. 1988. "The Industrial Organization of Congress; or, Why Legislatures, Like Firms, Are Not Organized as Markets." Journal of Political Economy 96(1): 132–163.

Yano, Tae, Noah A. Smith, and John D. Wilkerson. 2012. "Textual Predictors of Bill Survival in Congressional Committees." Proceedings of the 2012 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: 793–802.

The following notes expand on each section of the main text in greater technical detail.

The FSM is formally defined over a state set S of 15 elements with a deterministic partial transition function δ : S × A ⇀ S (defined only for permitted (state, action) pairs), where A is the normalized action ontology derived from Bill Status XML action codes. The system achieves 99.6% consistency across 6,214 bills: 6,187 traces contain no invalid transitions. The 27 inconsistent traces involve procedural irregularities such as re-referral after passage, rare events that the FSM flags rather than suppresses, preserving the audit trail. The transition function is strict: an undefined (state, action) pair halts processing of that trace and triggers manual review, so the system never silently misrepresents procedure. The state ontology is deliberately coarser than the full set of possible procedural positions; the 15-state design captures the gates relevant to text filtering while remaining tractable for cross-Congress comparison. The FSM directly enables Paper 1.1 of the research agenda (negative agenda control as measurable gate leverage) by providing exact gate sequences and timing for direct tests of whether agenda control operates through procedural routing.
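A minimal Python sketch of strict replay through a partial transition function follows. The state and action names in `DELTA` are hypothetical placeholders, not the system's 15-state ontology, and `replay` and `consistency_rate` are illustrative helpers. What it shows is that an undefined (state, action) pair is recorded for review instead of being skipped.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

# Hypothetical fragment of the transition table. The real 15-state set S and the
# normalized action ontology A are not reproduced here; the names are placeholders.
DELTA = {
    ("INTRODUCED", "REFER_TO_COMMITTEE"): "IN_COMMITTEE",
    ("IN_COMMITTEE", "REPORT"): "REPORTED",
    ("REPORTED", "PASS_FLOOR"): "PASSED_CHAMBER",
    ("PASSED_CHAMBER", "PASS_OTHER_CHAMBER"): "PASSED_BOTH",
    ("PASSED_BOTH", "ENROLL"): "ENROLLED",
}


@dataclass
class Trace:
    bill_id: str
    states: list = field(default_factory=list)
    invalid: Optional[Tuple[str, str]] = None  # first undefined (state, action) pair


def replay(bill_id, actions, start="INTRODUCED"):
    """Replay normalized actions through the partial transition function.

    An undefined (state, action) pair stops the replay for this bill and is
    recorded on the trace for manual review; it is never skipped silently.
    """
    trace = Trace(bill_id, [start])
    state = start
    for action in actions:
        nxt = DELTA.get((state, action))
        if nxt is None:
            trace.invalid = (state, action)
            break
        state = nxt
        trace.states.append(state)
    return trace


def consistency_rate(traces):
    """Share of traces that contain no invalid transition."""
    return sum(t.invalid is None for t in traces) / len(traces)


ok = replay("hr1", ["REFER_TO_COMMITTEE", "REPORT", "PASS_FLOOR"])
bad = replay("hr2", ["REFER_TO_COMMITTEE", "PASS_FLOOR"])  # undefined in this toy table
print(ok.states[-1], bad.invalid, consistency_rate([ok, bad]))
```

Applied to the counts reported in the text, the same rate calculation gives 6,187 / 6,214 ≈ 0.9957, which rounds to the stated 99.6%.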

Gate stacks formalize the institutional veto point literature (Tsebelis 2002; Krehbiel 1998) as computable objects. Each bill's gate stack Γ(b) = (g₁, g₂, ..., g_m) is inferred from FSM state transitions rather than coded from legislative histories, eliminating coder discretion as a source of measurement error. The four core gates (committee, floor, conference, enrollment) correspond to the primary institutional control points identified in the procedural cartel (Cox and McCubbins 2005), pivotal politics (Krehbiel 1998), and bicameral bargaining (Longley and Oleszek 1989) literatures. Gate density as a metric connects directly to Binder's (1999) legislative gridlock measures but operates at the bill level rather than the agenda level, making within-Congress variation in procedural exposure measurable. This layer is the primary substrate for Paper 3.1 (the proceduralization of congressional gridlock), enabling decomposition of gridlock into preference-based and procedure-based components.
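The sketch below assumes a hypothetical mapping from FSM states to the four core gates and reads a bill's gate stack directly off its state sequence. Because the stack is derived mechanically, no coder judgment enters it. The per-Congress mean of gates cleared is an illustrative summary of within-Congress variation only. It is not the document's gate density metric, whose formula is not given here.

```python
# Hypothetical mapping from FSM states to the four core gates: entering one of
# these states means the bill has cleared the corresponding gate. A bill that
# passes both chambers clears the floor gate twice.
STATE_TO_GATE = {
    "REPORTED": "committee",
    "PASSED_CHAMBER": "floor",
    "PASSED_BOTH": "floor",
    "CONFERENCE_REPORTED": "conference",
    "ENROLLED": "enrollment",
}


def gate_stack(states):
    """Return Γ(b) = (g1, ..., gm): the ordered gates implied by a bill's FSM state sequence."""
    return tuple(STATE_TO_GATE[s] for s in states if s in STATE_TO_GATE)


def gates_cleared_by_congress(bills):
    """Mean number of gates cleared per bill, grouped by Congress.

    bills: iterable of (congress, state_sequence) pairs.
    """
    totals, counts = {}, {}
    for congress, states in bills:
        totals[congress] = totals.get(congress, 0) + len(gate_stack(states))
        counts[congress] = counts.get(congress, 0) + 1
    return {c: totals[c] / counts[c] for c in totals}


states = ["INTRODUCED", "IN_COMMITTEE", "REPORTED", "PASSED_CHAMBER", "PASSED_BOTH", "ENROLLED"]
print(gate_stack(states))  # ('committee', 'floor', 'floor', 'enrollment')
```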

The amendment DAG is constructed by parsing GPO Legislative XML amendment operations and linking each operation to its target text nodes. The graph is directed and acyclic because amendments apply to text states that are temporally prior. Overwrite ratios connect to Sinclair's (2016) observations about substitute amendments and strike-all replacements but provide continuous, computable metrics. Branching factor provides a structural operationalization of amendment tree complexity that complements the Rules Committee literature on structured versus open rules (Oleszek 2016). This layer is the primary substrate for Paper 3.2 (amendment rights and legislative openness over time) and Paper 1.3 (leadership control and procedural manipulation), enabling measurement of how amendment opportunities and leadership procedural interventions have evolved.
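As a hedged sketch, the amendment graph can be stored as node-to-predecessor sets and checked for acyclicity with Python's standard-library `graphlib.TopologicalSorter`, which raises `CycleError` when a cycle exists. The definitions of branching factor (mean amendments per amended node) and overwrite ratio (share of a prior version's atoms struck or replaced) are assumptions made for illustration, since the text does not state exact formulas.

```python
from collections import Counter
from graphlib import CycleError, TopologicalSorter


def build_amendment_dag(operations):
    """Build the amendment graph from (amendment_id, target_id) pairs.

    A target is the base text or an earlier amendment (second-degree amendments
    target first-degree ones). Returns node -> set of predecessors, and raises
    graphlib.CycleError if any amendment transitively targets itself.
    """
    graph = {}
    for amendment, target in operations:
        graph.setdefault(target, set())
        graph.setdefault(amendment, set()).add(target)  # the target must exist first
    list(TopologicalSorter(graph).static_order())  # validates acyclicity
    return graph


def branching_factor(operations):
    """Mean number of amendments per amended node (an assumed definition)."""
    per_target = Counter(target for _, target in operations)
    return sum(per_target.values()) / len(per_target) if per_target else 0.0


def overwrite_ratio(prior_atoms, struck_atoms):
    """Share of a prior version's atoms that were struck or replaced (an assumed definition)."""
    prior = set(prior_atoms)
    return len(prior & set(struck_atoms)) / len(prior) if prior else 0.0


ops = [("A1", "BASE"), ("A2", "BASE"), ("A1-1", "A1")]  # A1-1 is second-degree
dag = build_amendment_dag(ops)
print(branching_factor(ops))  # 1.5: BASE has two amendments, A1 has one
```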

The vehicle inference layer formalizes Sinclair's (2016) typology of unorthodox lawmaking and Krutz's (2001) analysis of omnibus legislating as computable structural classifications. Shell vehicles are identified by a low introduced-text-to-final-text ratio: little of the final text traces back to the bill as introduced. Conference overwrites are identified by high conference divergence rates. The cutoffs that define "low" and "high" are design parameters, not estimated quantities. This layer is the primary substrate for Paper 2.2 (vehicle substitution and strategic bill shells), Paper 2.3 (conference committee power and text hijacking), and Paper 3.3 (reconciliation exploitation and budgetary procedure).
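A rule-based classifier in the spirit of this layer might look like the following. The threshold values, the input names `retained_share` and `conference_divergence`, and the precedence of the shell rule over the conference rule are all hypothetical design choices. This matches the text's point that thresholds are design parameters rather than estimates.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VehicleThresholds:
    # Design parameters, not estimates. These default values are hypothetical.
    shell_retention_max: float = 0.10
    conference_divergence_min: float = 0.50


def classify_vehicle(retained_share: float,
                     conference_divergence: Optional[float],
                     thresholds: VehicleThresholds = VehicleThresholds()) -> str:
    """Assign a structural vehicle label.

    retained_share: share of final-text atoms that trace back to the introduced version.
    conference_divergence: share of final-text atoms first appearing in the conference
        report, or None if the bill never went to conference.
    """
    if retained_share <= thresholds.shell_retention_max:
        return "shell_vehicle"  # checked first: a gutted shell is labeled as such
    if (conference_divergence is not None
            and conference_divergence >= thresholds.conference_divergence_min):
        return "conference_overwrite"
    return "standard"


print(classify_vehicle(0.05, None), classify_vehicle(0.80, 0.60))
```

Keeping the cutoffs in a frozen dataclass makes each classification run reproducible, since the exact parameter set can be logged alongside the labels it produced.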

The atomic text unit—one `<text>` leaf node from GPO Legislative XML—is the observational substrate, stored in `text.atom` with structural parent reference, raw and normalized text, content hash, and ordinal position. The full containment hierarchy is materialized through `text.edge` (parent-child graph), with cross-references in `text.atom_internal_ref` and `text.section_ref_index`, dependencies in `text.atom_dependency`, and adjacency optionally materialized in `text.atom_adjacency`. Version-to-version continuity is established only through explicit lineage rules in `text.atom_lineage`, with permitted methods limited to deterministic_operation, exact_hash_same_address, and unknown. No semantic similarity is used to establish lineage. This layer is the primary substrate for Papers 2.1 (ghost bills), 4.1 (statutory mutation topology), 4.2 (legislative text reuse), and 4.3 (provenance tracking for enacted law).
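The `exact_hash_same_address` rule can be sketched as a join on (structural address, content hash). This rests on three assumptions. Atoms arrive as `atom_id -> (address, raw_text)` mappings. The hash is SHA-256 over a hypothetical normalization (Unicode NFKC plus whitespace collapsing). Ambiguous or missing matches yield no link. The actual `text.atom_lineage` logic and normalization rules are not reproduced here.

```python
import hashlib
import re
import unicodedata


def normalize(text):
    """Hypothetical normalization: Unicode NFKC, then collapse runs of whitespace."""
    return re.sub(r"\s+", " ", unicodedata.normalize("NFKC", text)).strip()


def content_hash(text):
    """SHA-256 hex digest of the normalized text."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def exact_hash_same_address(prior, current):
    """Link atoms across two versions when structural address and content hash both match.

    prior, current: dicts mapping atom_id -> (address, raw_text), where address is a
    tuple path such as ("title:I", "sec:101", "subsec:b", "para:2").
    Returns (links, unmatched): links are (prior_id, current_id, method) rows;
    unmatched current atoms get no link from this rule.
    """
    index = {}
    for atom_id, (address, text) in prior.items():
        index.setdefault((address, content_hash(text)), []).append(atom_id)
    links, unmatched = [], []
    for atom_id, (address, text) in current.items():
        candidates = index.get((address, content_hash(text)), [])
        if len(candidates) == 1:
            links.append((candidates[0], atom_id, "exact_hash_same_address"))
        else:
            unmatched.append(atom_id)  # zero or ambiguous matches: assert nothing
    return links, unmatched


prior = {
    "a1": (("sec:101", "para:1"), "The Secretary shall  issue rules."),
    "a2": (("sec:101", "para:2"), "Funds are authorized."),
}
current = {
    "b1": (("sec:101", "para:1"), "The Secretary shall issue rules."),
    "b2": (("sec:101", "para:2"), "Funds are appropriated."),
}
print(exact_hash_same_address(prior, current))
```

Refusing to link when an (address, hash) key matches more than one prior atom mirrors the rule that lineage is asserted only when it is deterministic. Unmatched atoms fall through to the other permitted methods or to `unknown`.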

The provision survival analysis estimates logit(Pr(T(u) = 1)) = α + β₁𝟙[d = floor_tracked] + β₂𝟙[d = floor_untracked] + β₃𝟙[d = conference_untracked] + γX_b + δ_c, with committee_untracked as the reference category, bill-level controls X_b (chamber, divided government, bill type), Congress fixed effects δ_c, and standard errors clustered at the bill level. The death gate specification uses multinomial logistic models with floor, conference, and enrollment as outcome categories. The sample restriction to bills passing at least one chamber floor ensures a common downstream procedural opportunity set. Causal identification is not claimed; the contribution is descriptive infrastructure. Validation is centered on XML-faithfulness: gate sequence fidelity (FSM-inferred transitions exactly reproducing Bill Status XML action code sequences), paragraph tracking accuracy (framework-inferred presence/absence matching Legislative XML structural nodes), and amendment operation recall (DAG-captured operations matching amendment XML markup). All validation metrics operate on deterministic, schema-defined objects.
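To make the dummy coding concrete, the sketch below builds the detection-context indicators with `committee_untracked` as the omitted reference category. It then converts a linear predictor into a survival probability with the logistic function. Coefficients are supplied by the caller, and `controls_term` and `congress_effect` are hypothetical stand-ins for γX_b and δ_c. Estimation itself, including bill-clustered standard errors, is left to a statistics package and is not shown.

```python
import math

# Detection contexts with their own coefficients; "committee_untracked" is the
# omitted reference category and therefore has no indicator column.
DETECTION_DUMMIES = ("floor_tracked", "floor_untracked", "conference_untracked")
REFERENCE = "committee_untracked"


def design_row(detection_context):
    """Indicator coding 1[d = j] for one provision's detection context."""
    if detection_context != REFERENCE and detection_context not in DETECTION_DUMMIES:
        raise ValueError(f"unknown detection context: {detection_context!r}")
    return [1.0 if detection_context == name else 0.0 for name in DETECTION_DUMMIES]


def survival_probability(alpha, betas, detection_context,
                         controls_term=0.0, congress_effect=0.0):
    """Inverse logit of alpha + sum_j beta_j * 1[d = j] + gamma*X_b + delta_c.

    betas: (beta1, beta2, beta3) in DETECTION_DUMMIES order, e.g. from a fitted model.
    controls_term, congress_effect: hypothetical precomputed values of gamma*X_b and delta_c.
    """
    eta = alpha + sum(b * x for b, x in zip(betas, design_row(detection_context)))
    eta += controls_term + congress_effect
    return 1.0 / (1.0 + math.exp(-eta))


print(design_row("floor_untracked"))  # [0.0, 1.0, 0.0]
```

Because the reference category codes to all zeros, α alone is the log-odds of survival for a committee_untracked provision when the control and Congress terms are zero, and each β is a log-odds difference relative to that baseline.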

The embedding layer adds `analysis.atom_embedding(atom_id, model_id, embedding vector, context_method)` to the schema. Context-enriched inputs are composed by walking the containment tree via `text.edge`, prepending structural ancestry to normalized text—e.g., "Title I — Section 101 — Subsection (b) — Paragraph (2): [text_norm]"—so that the embedding captures both semantic content and structural position. A single leaf-level text node is often too small to embed meaningfully in isolation; the ancestry context resolves this without altering the atomic unit itself.
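A minimal sketch of composing a context-enriched embedding input by walking parent links follows. It assumes `parent_of` and `label_of` dictionaries loaded from `text.edge` and the structural labels, both of which are hypothetical in-memory shapes. The atom's own text is only prefixed, never altered.

```python
def ancestry_labels(atom_id, parent_of, label_of):
    """Walk parent links (as stored in text.edge) from an atom up to the root.

    parent_of: dict child_id -> parent_id; the root has no entry.
    label_of: dict node_id -> display label such as "Section 101".
    Returns ancestor labels ordered root-first, excluding the atom itself.
    """
    labels, seen = [], set()
    node = parent_of.get(atom_id)
    while node is not None:
        if node in seen:
            raise ValueError(f"containment cycle at {node!r}")
        seen.add(node)
        labels.append(label_of[node])
        node = parent_of.get(node)
    return labels[::-1]


def context_input(atom_id, text_norm, parent_of, label_of, sep=" \u2014 "):
    """Prefix normalized text with its structural ancestry.

    The default separator mirrors the example format given in the text.
    """
    labels = ancestry_labels(atom_id, parent_of, label_of)
    return f"{sep.join(labels)}: {text_norm}" if labels else text_norm


parent_of = {"atom9": "p2", "p2": "sb", "sb": "s101", "s101": "t1"}
label_of = {"t1": "Title I", "s101": "Section 101", "sb": "Subsection (b)", "p2": "Paragraph (2)"}
print(context_input("atom9", "funds shall be made available", parent_of, label_of))
```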

Retrieval uses a hybrid strategy combining sparse and dense methods. Sparse lexical retrieval operates on `text_norm`, either through PostgreSQL full-text search (tsvector indexing, whose ts_rank scoring is not BM25) or through ParadeDB pg_search for true BM25 scoring, catching exact terminology, statutory citations, and formulaic language. An HNSW index on pgvector provides approximate nearest-neighbor search on dense vectors, catching semantic similarity across different vocabulary. The two result sets are combined via reciprocal rank fusion. Every retrieved provision carries its full deterministic context (gate stack, amendment history, lineage trajectory, survival outcome) because the relational schema's foreign keys function as graph edges for multi-hop traversal: atom → bill → gate_events → committee_referral → congress → political_context. This traversal is SQL-native and requires no separate graph database.
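Reciprocal rank fusion scores each candidate by summing 1 / (k + rank) over the ranked lists it appears in, so items ranked well by both the lexical and the dense retriever rise to the top. The sketch below uses k = 60, a commonly used default rather than a value taken from this system, and breaks ties by id for determinism. The hit lists are toy data.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked id lists: score(d) = sum over lists of 1 / (k + rank_d), ranks from 1.

    rankings: iterable of lists, each ordered best-first (e.g. BM25 hits, HNSW hits).
    Returns ids sorted by fused score, ties broken by id for determinism.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


bm25_hits = ["a", "b", "c"]   # lexical: exact citations and terms
dense_hits = ["b", "d", "a"]  # semantic: paraphrases
print(reciprocal_rank_fusion([bm25_hits, dense_hits]))  # ['b', 'a', 'd', 'c']
```

Because RRF uses only ranks, it needs no calibration between lexical relevance scores and cosine similarities, which live on incomparable scales.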

For predictive modeling, the feature space combines three categories. Structural features from the deterministic layer: detection context, gate position, amendment depth, vehicle type, bill gate density, overwrite ratio. Semantic features from the embedding layer: similarity to historically survived and historically stripped provisions, semantic distance from the rest of the bill, policy domain cluster membership. Congressional context features: majority margin, unified or divided government, Congress-level base rates, committee-specific patterns. Training data comes from historical provision-level outcomes via `text.atom_lineage` and `analysis.atom_diff`. Models estimate provision survival probability, procedural trajectory likelihoods, conference divergence rates, and vehicle absorption probability. The epistemic separation is maintained throughout: embeddings serve retrieval and prediction but never establish lineage or modify structural truth.
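The semantic features named in the text can be sketched as nearest-neighbor similarities to historical provisions whose outcomes come from the deterministic layer. The feature names (`max_sim_survived`, `max_sim_stripped`, `survival_contrast`) and the use of maximum cosine similarity are assumptions for illustration. The hypothetical helper `feature_row` merges the structural, semantic, and context groups and rejects duplicate names.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_outcome_features(vec, survived, stripped):
    """Hypothetical semantic features for one provision.

    survived, stripped: embedding vectors of historical provisions whose outcome
    (survival or removal) was determined by lineage, not by embeddings.
    """
    max_survived = max((cosine(vec, v) for v in survived), default=0.0)
    max_stripped = max((cosine(vec, v) for v in stripped), default=0.0)
    return {
        "max_sim_survived": max_survived,
        "max_sim_stripped": max_stripped,
        "survival_contrast": max_survived - max_stripped,
    }


def feature_row(structural, semantic, context):
    """Merge the three feature groups, refusing silent name collisions."""
    row = {}
    for group in (structural, semantic, context):
        overlap = row.keys() & group.keys()
        if overlap:
            raise KeyError(f"duplicate feature names: {sorted(overlap)}")
        row.update(group)
    return row


sem = semantic_outcome_features([1.0, 0.0], survived=[[1.0, 0.0]], stripped=[[0.0, 1.0]])
print(feature_row({"gate_position": 2}, sem, {"divided_government": 1}))
```

The outcome labels used to split `survived` from `stripped` come from lineage, not from the embeddings, which preserves the epistemic separation the text describes.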

The fifteen-paper research agenda spans five clusters (institutional power, strategic behavior, temporal dynamics, computational law, applications) plus three cross-cutting methodological extensions (state legislatures, comparative legislatures, regulatory procedure). Each paper builds on specific layers of the core framework: Papers 1.1–1.3 rely primarily on the FSM and gate stacks; Papers 2.1–2.3 on textual lineage and vehicle classification; Papers 3.1–3.3 on temporal analysis of gate stacks, amendment topology, and vehicle patterns; Papers 4.1–4.3 on lineage graphs and cross-reference resolution; Papers 5.1–5.3 on the integrated framework including the embedding layer. The state legislature extension faces the greatest implementation challenge due to heterogeneous procedural rules and varying XML availability across 50 states. The regulatory procedure extension (modeling notice-and-comment rulemaking as a deterministic FSM) would extend the framework beyond the legislative branch entirely, enabling unified computational analysis of how policy moves from bill text through enacted statute to implemented regulation.